
Tomasz Tunguz

GPT-5 achieves 94.6% accuracy on AIME 2025, suggesting near-human mathematical reasoning.

Yet ask it to query your database, and success rates plummet to the teens.

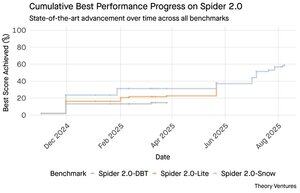

The Spider 2.0 benchmarks reveal a yawning gap in AI capabilities. Spider 2.0 is a comprehensive text-to-SQL benchmark that tests AI models’ ability to generate accurate SQL queries from natural language questions across real-world databases.

While large language models have conquered knowledge work in mathematics, coding, and reasoning, text-to-SQL remains stubbornly difficult.

The three Spider 2.0 benchmarks test real-world database querying across different environments. Spider 2.0-Snow uses Snowflake databases with 547 test examples, peaking at 59.05% accuracy.

Spider 2.0-Lite spans BigQuery, Snowflake, and SQLite with another 547 examples, reaching only 37.84%. Spider 2.0-DBT tests code generation against DuckDB with 68 examples, topping out at 39.71%.
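Text-to-SQL benchmarks in the Spider family generally score a prediction by executing it and comparing its results with those of a reference query, rather than by matching SQL text. The sketch below illustrates that idea on an in-memory SQLite database. The table, the queries, and the order-insensitive comparison rule are illustrative assumptions, not Spider 2.0's official evaluator.

```python
import sqlite3
from collections import Counter


def execution_match(conn: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> bool:
    """Return True if both queries produce the same multiset of rows.

    Row order is ignored (an assumption; some evaluators respect ORDER BY).
    A predicted query that fails to execute counts as wrong.
    """
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False
    gold_rows = conn.execute(gold_sql).fetchall()
    return Counter(predicted_rows) == Counter(gold_rows)


def execution_accuracy(conn: sqlite3.Connection, pairs: list[tuple[str, str]]) -> float:
    """Fraction of (predicted, gold) pairs whose results match."""
    hits = sum(execution_match(conn, pred, gold) for pred, gold in pairs)
    return hits / len(pairs)


# Hypothetical toy schema standing in for a real warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "EU", 120.0), (2, "US", 80.0), (3, "EU", 40.0)],
)

gold = "SELECT region, SUM(amount) FROM orders GROUP BY region"
pairs = [
    # Different SQL text, same result set: counts as correct.
    ("SELECT region, SUM(amount) AS total FROM orders GROUP BY region ORDER BY total", gold),
    # Runs fine but answers a different question (average, not total).
    ("SELECT region, AVG(amount) FROM orders GROUP BY region", gold),
    # References a column that does not exist: fails to execute.
    ("SELECT region, SUM(price) FROM orders GROUP BY region", gold),
]
print(execution_accuracy(conn, pairs))  # one of the three predictions matches
```

The second prediction shows why the bar is high: the query is valid SQL and looks reasonable, yet it returns the wrong numbers.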

This performance gap isn’t for lack of trying. Since November 2024, 56 submissions from 12 model families have competed on these benchmarks.

Anthropic, OpenAI, DeepSeek, and others have all pushed their models against these tests. Progress has been steady, from roughly 2% to about 60% in the last nine months.

The puzzle deepens when you consider SQL’s constraints. SQL has a far smaller vocabulary than English, with its roughly 600,000 words, or than general-purpose programming languages, with their much broader syntax and libraries. Plus, there’s plenty of SQL out there to train on.

If anything, this should be easier than the open-ended reasoning tasks where models now excel.

Yet even perfect SQL generation wouldn’t solve the real business challenge. Every company, and often every team within it, defines core metrics like “revenue” differently.

Take customer acquisition cost. Marketing measures it by campaign spend, sales calculates it using account executive costs, and finance includes fully loaded employee expenses. These semantic differences create confusion that technical accuracy can’t resolve.
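To make the ambiguity concrete, here is a sketch that computes customer acquisition cost three ways over the same quarter. All figures and the exact cost buckets are hypothetical. Each formula is internally correct, yet the answers differ, so a model that writes flawless SQL for one definition can still answer the wrong team's question.

```python
from dataclasses import dataclass


@dataclass
class QuarterCosts:
    # Hypothetical quarterly figures, in dollars.
    campaign_spend: float        # paid media and campaigns
    ae_costs: float              # account executive compensation
    other_loaded_costs: float    # benefits, tooling, overhead for go-to-market staff
    new_customers: int


def cac_marketing(q: QuarterCosts) -> float:
    # Marketing's view: campaign spend only.
    return q.campaign_spend / q.new_customers


def cac_sales(q: QuarterCosts) -> float:
    # Sales' view: account executive costs.
    return q.ae_costs / q.new_customers


def cac_finance(q: QuarterCosts) -> float:
    # Finance's view: fully loaded, all acquisition costs together.
    total = q.campaign_spend + q.ae_costs + q.other_loaded_costs
    return total / q.new_customers


q = QuarterCosts(campaign_spend=200_000, ae_costs=300_000,
                 other_loaded_costs=100_000, new_customers=50)
for name, fn in [("marketing", cac_marketing), ("sales", cac_sales), ("finance", cac_finance)]:
    print(f"{name:>9}: ${fn(q):,.0f}")
```

With these made-up inputs, the three teams report $4,000, $6,000, and $12,000 per customer for the same quarter.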

The Spider 2.0 results point to a fundamental truth about data work. Technical proficiency in SQL syntax is just the entry point.

The real challenge lies in business context: understanding what the data means, how different teams define metrics, and when edge cases matter. As I wrote about in Semantic Cultivators, the bridge between raw data and business meaning requires human judgment that current AI can’t replicate.


Perplexity AI just made a $34.5b unsolicited offer for Google’s Chrome browser, attempting to capitalize on the pending antitrust ruling that could force Google to divest its browser business.

Comparing Chrome’s economics to Google’s existing Safari deal reveals why $34.5b undervalues the browser.

Google pays Apple $18-20b annually to remain Safari’s default search engine¹, serving approximately 850m users². This translates to $21 per user per year.

The Perplexity offer values Chrome at $34.5b, or roughly $10 per user for its 3.5b users³, paid once rather than every year.

If Chrome users commanded the same terms as the Google/Apple Safari deal, the browser’s annual revenue potential would exceed $73b.

These figures are approximations based on public estimates.

This assumes that Google would pay a new owner of Chrome a similarly scaled fee for default search placement. Applying a 5x to 6x revenue multiple to roughly $73b of annual revenue puts Chrome’s value at about $365b to $440b, a far cry from the $34.5b offer.
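The valuation argument reduces to a few lines of arithmetic. This sketch reproduces it from the rounded public estimates quoted in the post, using the low end of the Safari payment range; swap in better inputs to see how sensitive the result is.

```python
# Inputs: rounded public estimates quoted in the post (approximations).
SAFARI_PAYMENT_LOW = 18e9      # Google's annual payment to Apple, low end of $18-20b
SAFARI_USERS = 850e6
CHROME_USERS = 3.5e9
PERPLEXITY_OFFER = 34.5e9


def per_user(amount: float, users: float) -> float:
    return amount / users


safari_fee_per_user = per_user(SAFARI_PAYMENT_LOW, SAFARI_USERS)   # ≈ $21 per user per year
offer_per_user = per_user(PERPLEXITY_OFFER, CHROME_USERS)          # ≈ $10 per user, one-time
chrome_annual_revenue = safari_fee_per_user * CHROME_USERS         # ≈ $74b per year

for multiple in (5, 6):
    value = chrome_annual_revenue * multiple
    print(f"{multiple}x revenue -> ${value / 1e9:,.0f}b "
          f"({value / PERPLEXITY_OFFER:.0f}x the offer)")
```

The key asymmetry is visible in the two per-user figures: roughly $21 every year under Safari-style terms versus roughly $10 once under the offer.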

Chrome dominates the market with 65% share⁴, compared to Safari’s 18%. A divestment would throw the search ads market into upheaval. The value of keeping advertiser budgets is hard to overstate for Google’s market cap & position in the ads ecosystem.

If forced to sell Chrome, Google would face an existential choice. Pay whatever it takes to remain the default search engine, or watch competitors turn its most valuable distribution channel into a cudgel against it.

How much is that worth? A significant premium to a simple revenue multiple.

¹ Bloomberg: Google’s Payments to Apple Reached $20 Billion in 2022

² ZipDo: Essential Apple Safari Statistics In 2024

³ Backlinko: Web Browser Market Share In 2025

⁴ Statcounter: Browser Market Share Worldwide


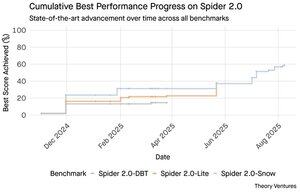

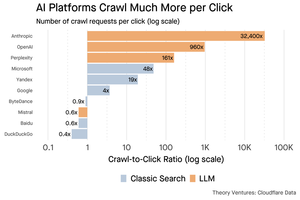

In 1999, the dotcoms were valued on traffic. IPO metrics revolved around eyeballs.

Then Google launched AdWords, an ad model predicated on clicks, & built a $273b business in 2024.

But that might all be about to change: Pew Research’s July 2025 study reveals that users clicked a search result in just 8% of searches that showed an AI summary, versus 15% of searches without one, a 47% relative reduction. Only 1% of searches led to a click on a link inside the AI summary itself.

Cloudflare data shows AI platforms crawl content far more than they refer traffic back: Anthropic crawls 32,400 pages for every 1 referral, while traditional search engines scan content just a couple of times per visitor sent.
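Both statistics are simple ratios. A minimal sketch of how they are derived; the crawl and referral counts are hypothetical placeholders chosen to reproduce the quoted 32,400:1 ratio, not Cloudflare's raw data.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change from `before` to `after` (negative means a decline)."""
    return (after - before) / before


def crawl_to_refer(pages_crawled: int, referrals: int) -> float:
    """Pages crawled per visitor referred back to the site."""
    if referrals == 0:
        return float("inf")  # crawled, but nothing sent back
    return pages_crawled / referrals


# Pew: a result was clicked in ~15% of searches without an AI summary, ~8% with one.
ctr_drop = relative_change(0.15, 0.08)
print(f"Click-through change: {ctr_drop:.0%}")

# Hypothetical counts for one site over one period.
print(crawl_to_refer(pages_crawled=324_000, referrals=10))   # AI crawler
print(crawl_to_refer(pages_crawled=20, referrals=10))        # traditional search engine
```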

The expense of serving content to the AI crawlers may not be huge if it’s mostly text.

The bigger point is AI systems disintermediate the user & publisher relationship. Users prefer aggregated AI answers over clicking through websites to find their answers.

It’s logical that most websites should expect less traffic. How will your website & your business handle it?

Sources:

- Pew Research Center - Athena Chapekis, July 22, 2025

- Cloudflare: The crawl before the fall of referrals

- Cloudflare Radar: AI Insights - Crawl to Refer Ratio

- Podcast: The Shifting Value of Content in the AI Age


GPT-5 launched yesterday. 94.6% on AIME 2025. 74.9% on SWE-bench.

As we approach the upper bounds of these benchmarks, they die.

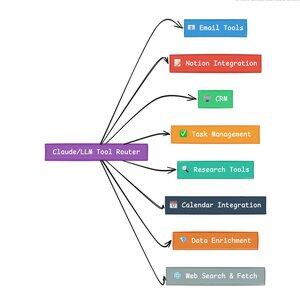

What makes GPT-5 and the next generation of models revolutionary isn’t their knowledge. It’s knowing how to act. For GPT-5 this happens at two levels: first, by deciding which model to use, and second, more importantly, through tool calling.

We’ve been living in an era where LLMs mastered knowledge retrieval & reassembly. Consumer search & coding, the initial killer applications, are fundamentally knowledge retrieval challenges. Both organize existing information in new ways.

We have climbed those hills and as a result competition is more intense than ever. Anthropic, OpenAI, and Google’s models are converging on similar capabilities. Chinese models and open source alternatives are continuing to push ever closer to state-of-the-art. Everyone can retrieve information. Everyone can generate text.

The new axis of competition? Tool-calling.

Tool-calling transforms LLMs from advisors to actors. It compensates for two critical model weaknesses that pure language models can’t overcome.

First, workflow orchestration. Models excel at single-shot responses but struggle with multi-step, stateful processes. Tools enable them to manage long workflows: tracking progress, handling errors, and maintaining context across dozens of operations.

Second, system integration. LLMs live in a text-only world. Tools let them interface predictably with external systems like databases, APIs, and enterprise software, turning natural language into executable actions.
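Mechanically, tool calling usually works like this: the application describes each tool to the model, the model replies with a structured call instead of prose, and the application validates and executes that call. The sketch below shows only the application side. The tool names, the registry, and the JSON shape of the model's reply are hypothetical; real providers each define their own request and response formats.

```python
import json
from typing import Any, Callable

# Hypothetical registry: tool name -> (function, required parameter names).
TOOLS: dict[str, tuple[Callable[..., Any], set[str]]] = {}


def tool(*required: str):
    """Register a function as a tool the model may call."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[fn.__name__] = (fn, set(required))
        return fn
    return register


@tool("email")
def lookup_crm(email: str) -> dict:
    # Hypothetical stand-in for a real CRM API call.
    return {"email": email, "found": email.endswith("@example.com")}


@tool("query")
def search_notes(query: str) -> list[str]:
    # Hypothetical stand-in for a notes or document search.
    return [f"note matching {query!r}"]


def dispatch(model_reply: str) -> Any:
    """Turn a model's JSON tool call into a real function call.

    Expects e.g. {"tool": "lookup_crm", "arguments": {"email": "a@example.com"}}.
    Unknown tools or missing arguments raise instead of guessing.
    """
    call = json.loads(model_reply)
    name, args = call["tool"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    fn, required = TOOLS[name]
    missing = required - set(args)
    if missing:
        raise ValueError(f"{name} missing arguments: {sorted(missing)}")
    return fn(**args)


print(dispatch('{"tool": "lookup_crm", "arguments": {"email": "ceo@example.com"}}'))
```

The validation step matters: the model only proposes an action, and the application decides whether that action is well-formed before anything touches an external system.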

In the last month I’ve built 58 different AI tools.

Email processors. CRM integrators. Notion updaters. Research assistants. Each tool extends the model’s capabilities into a new domain.

The most important capability for AI is selecting the right tool quickly and correctly. Every misrouted step kills the entire workflow.

When I say “read this email from Y Combinator & find all the startups that are not in the CRM,” modern LLMs execute a complex sequence.

One command in English replaces an entire workflow. And this is just a simple one.

Even better, the model, properly set up with the right tools, can verify its own work, confirming that the tasks were actually completed. This self-verification loop creates reliability in workflows that is hard to achieve otherwise.
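The email-to-CRM request above decomposes into a short chain of tool calls followed by a verification pass. Here is a minimal sketch of that chain, assuming hypothetical helpers (`extract_startup_domains`, `crm_contains`) and an in-memory set standing in for the CRM. In a real agent, the model would choose and sequence these calls itself.

```python
import re

# Hypothetical in-memory CRM keyed by company domain.
CRM: set[str] = {"acme.ai", "dataco.io"}

URL_RE = re.compile(r"https?://(?:www\.)?([a-z0-9.-]+\.[a-z]{2,})", re.IGNORECASE)


def extract_startup_domains(email_body: str) -> list[str]:
    """Step 1 (hypothetical tool): pull company domains out of links."""
    seen: dict[str, None] = {}
    for domain in URL_RE.findall(email_body):
        seen[domain.lower()] = None  # dict keeps first-seen order and drops duplicates
    return list(seen)


def crm_contains(domain: str) -> bool:
    """Step 2 (hypothetical tool): look one company up in the CRM."""
    return domain in CRM


def find_missing(email_body: str) -> list[str]:
    domains = extract_startup_domains(email_body)
    return [d for d in domains if not crm_contains(d)]


def verify(email_body: str, missing: list[str]) -> bool:
    """Self-check: every reported company is truly absent from the CRM,
    and every extracted company is either in the CRM or in the report."""
    domains = extract_startup_domains(email_body)
    all_absent = all(not crm_contains(d) for d in missing)
    all_accounted = all(crm_contains(d) or d in missing for d in domains)
    return all_absent and all_accounted


email = """This batch includes https://acme.ai, https://www.newco.dev/launch
and http://rocketdb.com. Also see https://dataco.io again."""
missing = find_missing(email)
print(missing, verify(email, missing))
```

The verification pass re-queries the tools rather than trusting the first answer, which is what catches a dropped or misreported company.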

Multiply this across hundreds of employees. Thousands of workflows. The productivity gains compound exponentially.

The winners in the future AI world will be the ones who are most sophisticated at orchestrating tools and routing the right queries. Every time. Once those workflows are predictable, that’s when we will all become agent managers.


2025 is the year of agents, & the key capability of agents is calling tools.

When using Claude Code, I can tell the AI to sift through a newsletter, find all the links to startups, and verify whether they exist in our CRM, all with a single command. This might involve two or three different tools being called.

But here’s the problem: using a large foundation model for this is expensive, often rate-limited, & overpowered for a selection task.

What is the best way to build an agentic system with tool calling?

The answer lies in small action models. NVIDIA released a compelling paper arguing that “Small language models (SLMs) are sufficiently powerful, inherently more suitable, & necessarily more economical for many invocations in agentic systems.”

I’ve been testing different local models to validate a cost reduction exercise. I started with Qwen3:30b, a mixture-of-experts model. It works but can be quite slow because it’s so large, even though only 3 billion of its 30 billion parameters are active at any one time.

The NVIDIA paper recommends the Salesforce xLAM model – a different architecture called a large action model specifically designed for tool selection.

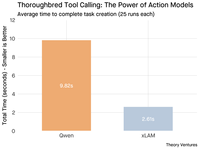

So I ran a test of my own: each model called a tool to list my Asana tasks.

The results were striking: xLAM completed tasks in 2.61 seconds with 100% success, while Qwen took 9.82 seconds with 92% success – nearly four times as long.
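A comparison like this needs little more than a timer and a success check around each call. The sketch below shows one way to structure it. `call_model` is a hypothetical stand-in for whatever invokes a local model and executes its tool call, and `fake_model` only simulates latency and occasional failures.

```python
import random
import statistics
import time
from typing import Callable


def benchmark(call_model: Callable[[str], bool], prompt: str, runs: int = 25) -> dict:
    """Time repeated tool-calling attempts and record how many succeed.

    `call_model` returns True when the model picked the right tool with
    valid arguments; any exception counts as a failure.
    """
    latencies, successes = [], 0
    for _ in range(runs):
        start = time.perf_counter()
        try:
            ok = call_model(prompt)
        except Exception:
            ok = False
        latencies.append(time.perf_counter() - start)
        successes += ok
    return {
        "mean_s": statistics.mean(latencies),
        "success_rate": successes / runs,
    }


def fake_model(prompt: str) -> bool:
    # Hypothetical stand-in: sleeps briefly and fails about 10% of the time.
    time.sleep(0.01)
    return random.random() > 0.1


random.seed(0)
print(benchmark(fake_model, "List my Asana tasks", runs=10))
```

Measuring latency and success together matters because a fast model that picks the wrong tool is not actually cheaper once retries are counted.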

This experiment shows the speed gain, but there’s a trade-off: how much intelligence should live in the model versus in the tools themselves.

With larger models like Qwen, tools can be simpler because the model has better error tolerance & can work around poorly designed interfaces. The model compensates for tool limitations through brute-force reasoning.

With smaller models, the model has less capacity to recover from mistakes, so the tools must be more robust & the selection logic more precise. This might seem like a limitation, but it’s actually a feature.

This constraint curbs the compounding error rate of chained LLM tool calls. When large models make sequential tool calls, errors accumulate exponentially.
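The compounding effect is easy to quantify under a simplifying assumption: if each tool call succeeds independently with probability p, an n-step chain succeeds with probability p^n, which decays exponentially with chain length. The 0.92 rate below mirrors the per-call success in the Asana test; 0.99 is a hypothetical comparison point.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability an n-step tool chain finishes with no failed step,
    assuming each step succeeds independently with the same probability."""
    if not 0.0 <= per_step <= 1.0:
        raise ValueError("per_step must be a probability")
    return per_step ** steps


# 0.92 mirrors the per-call success rate in the Asana test; 0.99 is hypothetical.
for p in (0.92, 0.99):
    row = ", ".join(f"{n} steps: {chain_success(p, n):.0%}" for n in (1, 3, 5, 10))
    print(f"p={p}: {row}")
```

At 92% per call, a ten-step chain completes less than half the time, which is why per-step precision matters more than raw capability in long workflows.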

Small action models force better system design, keeping the best of LLMs and combining it with specialized models.

This architecture is more efficient, faster, & more predictable.


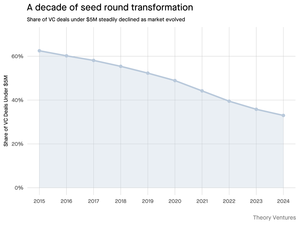

Why is the sub-$5 million seed round shrinking?

A decade ago, these smaller rounds formed the backbone of startup financing, comprising over 70% of all seed deals. Today, PitchBook data reveals that figure has plummeted to less than half.

The numbers tell a stark story. Sub-$5M deals declined from 62.5% in 2015 to 37.5% in 2024. This 25 percentage point drop fundamentally reshaped how startups raise their first institutional capital.
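Percentage points and percent are easy to conflate here. A quick check using the two PitchBook shares quoted for 2015 and 2024:

```python
share_2015 = 62.5  # % of seed deals under $5M in 2015, per the PitchBook figures
share_2024 = 37.5  # % in 2024

point_drop = share_2015 - share_2024       # absolute change, in percentage points
relative_drop = point_drop / share_2015    # change relative to the 2015 share

print(f"{point_drop:.1f} percentage points, a {relative_drop:.0%} relative decline")
```

A 25-point drop from a 62.5% base means the small round's share of seed deals fell by 40%.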

Three forces drove this transformation. We can decompose the decline to understand what shrank the small seed round & why it matters for founders today.


This was so fun, Mario. Thanks for having me on the show to talk about everything going on in the market!

Mario Gabriele 🦊 July 22, 2025

Our latest episode with Tomasz Tunguz is live!

The Decade of Data

@ttunguz has spent almost two decades turning data into investment insights. After backing Looker, Expensify, and Monte Carlo at Redpoint Ventures, he launched @Theoryvc in 2022 with a bold vision: build an "investing corporation" where researchers, engineers, and operators sit alongside investors, creating real-time market maps and in-house AI tooling. His debut fund closed at $238 million, followed just 19 months later by a $450 million second fund. Centered on data, AI, and crypto infrastructure, Theory operates at the heart of today's most consequential technological shifts. We explore how data is reshaping venture capital, why traditional investment models are being disrupted, and what it takes to build a firm that doesn't just predict the future but actively helps create it.

Listen now:

• YouTube:

• Spotify:

• Apple:

A big thank you to the incredible sponsors that make the podcast possible:

✨ Brex — The banking solution for startups:

✨ Generalist+ — Essential intelligence for modern investors and technologists:

We explore:

→ How Theory’s “investing corporation” model works

→ Why crypto exchanges could create a viable path to public markets for small-cap software companies

→ The looming power crunch—why data centers could consume 15% of U.S. electricity within five years

→ Stablecoins’ rapid ascent as major banks route 5‑10% of U.S. dollars through them

→ Why Ethereum faces an existential challenge similar to AWS losing ground to Azure in the AI era

→ Why Tomasz believes today’s handful of agents will become 100+ digital co‑workers by year‑end

→ Why Meta is betting billions on AR glasses to change how we interact with machines

→ How Theory Ventures uses AI to accelerate market research, deal analysis, and investment decisions

…And much more!


OpenAI receives on average 1 query per American per day.

Google receives about 4 queries per American per day.

Since 50% of Google search queries show AI Overviews, at least 60% of US searches now involve AI.
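The 60% figure is a weighted blend of the two query volumes. A sketch of the arithmetic, assuming every ChatGPT query and every Google query that shows an AI Overview counts as an AI search, and ignoring other assistants and search engines:

```python
def ai_share(ai_native_queries: float, search_queries: float, overview_rate: float) -> float:
    """Share of all queries that involve AI.

    ai_native_queries: daily queries to an AI assistant, per person
    search_queries:    daily queries to a search engine, per person
    overview_rate:     fraction of search queries that show an AI summary
    """
    ai = ai_native_queries + overview_rate * search_queries
    return ai / (ai_native_queries + search_queries)


# 1 OpenAI query and 4 Google queries per American per day, half with AI Overviews.
print(f"{ai_share(1, 4, 0.5):.0%}")
```

Here (1 + 0.5 × 4) / (1 + 4) = 3 / 5, so three of every five daily queries touch AI.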

It’s taken a bit longer than I expected for this to happen. In 2024, I predicted that 50% of consumer search would be AI-enabled.

But AI has arrived in search.

If Google search patterns are any indication, there’s a power law in search behavior. SparkToro’s analysis of Google search behavior shows that the top third of Americans who search execute upwards of 80% of all searches, which means AI use likely isn’t evenly distributed either, much like the future.

Websites & businesses are starting to feel the impact. The Economist’s piece “AI is killing the web. Can anything save it?” captures the zeitgeist in a headline.

A supermajority of Americans now search with AI. The second-order effects of changing search patterns will arrive in the second half of this year, and more people will be asking, “What Happened to My Traffic?”

AI is a new distribution channel & those who seize it will gain market share.

- William Gibson saw much further into the future!

- This is based on a midpoint reading of the SparkToro chart. It is a very simple analysis & carries some error as a result.
