
2025 is the year of agents, & the key capability of agents is calling tools.

When using Claude Code, I can tell the AI to sift through a newsletter, find all the links to startups, & verify they exist in our CRM, all with a single command. This might involve calling two or three different tools.
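To make tool calling concrete, here is a minimal Python sketch of that newsletter workflow. The tool names (`extract_links`, `check_crm`), the in-memory CRM, & the `{"name": ..., "arguments": ...}` shape of a tool call are all hypothetical stand-ins, not Claude Code's actual interface. The point is the division of labor: the model only decides which tool to call & with what arguments, while ordinary code does the work.

```python
import json
import re
from typing import Any, Callable

# Hypothetical CRM contents: domains of startups we already track.
CRM_DOMAINS = {"acme.ai", "example.com"}

def extract_links(text: str) -> list[str]:
    """Return every http(s) URL in the text, minus trailing punctuation."""
    urls = re.findall(r"https?://[^\s)>\]]+", text)
    return [url.rstrip(".,;:!?") for url in urls]

def check_crm(urls: list[str]) -> dict[str, bool]:
    """Map each URL to whether its domain is already in the hypothetical CRM."""
    found = {}
    for url in urls:
        # Drop the scheme and an optional "www.", then keep only the host part.
        domain = re.sub(r"^https?://(www\.)?", "", url).split("/")[0]
        found[url] = domain in CRM_DOMAINS
    return found

# The "toolbox" the model may choose from; names are illustrative only.
TOOLS: dict[str, Callable[..., Any]] = {
    "extract_links": extract_links,
    "check_crm": check_crm,
}

def dispatch(tool_call_json: str) -> Any:
    """Run one tool call that a model emitted as {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    return TOOLS[call["name"]](**call["arguments"])

# Two chained calls, as a model might emit them.
newsletter = "New raises this week: https://acme.ai, https://www.newco.io/about."
links = dispatch(json.dumps({"name": "extract_links", "arguments": {"text": newsletter}}))
print(dispatch(json.dumps({"name": "check_crm", "arguments": {"urls": links}})))
```

Each tool is plain, deterministic code, so the only thing that can go wrong on the model's side is picking the wrong tool or passing bad arguments.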

But here’s the problem: using a large foundation model for this is expensive, often rate-limited, & overpowered for a selection task.

What is the best way to build an agentic system with tool calling?

The answer lies in small action models. NVIDIA released a compelling paper arguing that “Small language models (SLMs) are sufficiently powerful, inherently more suitable, & necessarily more economical for many invocations in agentic systems.”

I’ve been testing different local models to validate a cost-reduction exercise. I started with Qwen3:30b, a 30-billion-parameter model, which works but can be quite slow because of its size, even though only 3 billion of those 30 billion parameters are active at any one time.

The NVIDIA paper recommends Salesforce’s xLAM model, a different kind of model called a large action model, designed specifically for tool selection.

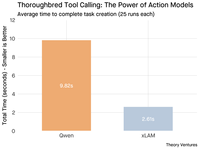

So I ran a test of my own: each model called a tool to list my Asana tasks.
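One plausible way to run a comparison like this locally is Ollama's `/api/chat` endpoint (by default at `http://localhost:11434`), which accepts a `tools` list of JSON-schema function definitions. The post doesn't say which runtime was used, so treat that as an assumption. The sketch below only builds the request body; the tool name `list_asana_tasks` & its `project` parameter are hypothetical. Sending the identical body to each model keeps the comparison fair.

```python
import json

def build_chat_request(model: str, user_prompt: str) -> dict:
    """Build an Ollama /api/chat request body that offers the model a single tool."""
    list_tasks_tool = {
        "type": "function",
        "function": {
            "name": "list_asana_tasks",  # hypothetical tool name
            "description": "List the current user's open Asana tasks.",
            "parameters": {  # JSON Schema describing the tool's arguments
                "type": "object",
                "properties": {
                    "project": {
                        "type": "string",
                        "description": "Optional project name to filter by.",
                    }
                },
                "required": [],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": [list_tasks_tool],
        "stream": False,  # ask for one complete response instead of streamed chunks
    }

body = build_chat_request("qwen3:30b", "What are my Asana tasks?")
print(json.dumps(body, indent=2))
```

If the model decides to use the tool, Ollama returns the choice in the response's `message.tool_calls`, & your own code then executes the actual Asana request.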

The results were striking: xLAM completed tasks in 2.61 seconds with a 100% success rate, while Qwen took 9.82 seconds, nearly four times as long, with a 92% success rate.
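A benchmark like this comes down to timing repeated calls & counting how often the model picked the expected tool. The harness below is a minimal sketch of that measurement, not the script behind these numbers. `call_model` stands for any function that prompts a model & returns the name of the tool it chose. The stubs in the usage are fake models that return fixed answers, so no real inference happens. For reference, 9.82 / 2.61 ≈ 3.76, which is the "nearly four times".

```python
import statistics
import time
from typing import Callable, Optional

def benchmark(call_model: Callable[[], Optional[str]], expected_tool: str, trials: int = 25) -> dict:
    """Call the model `trials` times; report mean latency and tool-selection success rate."""
    latencies = []
    successes = 0
    for _ in range(trials):
        start = time.perf_counter()
        try:
            chosen = call_model()
        except Exception:
            chosen = None  # a crash or unparseable output counts as a failure
        latencies.append(time.perf_counter() - start)
        if chosen == expected_tool:
            successes += 1
    return {
        "mean_seconds": statistics.mean(latencies),
        "success_rate": successes / trials,
    }

# Stand-in "models" for illustration only.
calls = {"n": 0}

def flaky_model() -> str:
    # Picks the wrong tool on every 4th call.
    calls["n"] += 1
    return "wrong_tool" if calls["n"] % 4 == 0 else "list_asana_tasks"

print(benchmark(lambda: "list_asana_tasks", "list_asana_tasks", trials=10))
print(benchmark(flaky_model, "list_asana_tasks", trials=8))
print(round(9.82 / 2.61, 2))
```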

This experiment shows the speed gain, but there’s a trade-off: how much intelligence should live in the model versus in the tools themselves. This limited…

With larger models like Qwen, tools can be simpler because the model has better error tolerance & can work around poorly designed interfaces. The model compensates for tool limitations through brute-force reasoning.

With smaller models, the model has less capacity to recover from mistakes, so the tools must be more robust & the selection logic more precise. This might seem like a limitation, but it’s actually a feature.
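One way to make tools "more robust" for a small model is to check every call before running it & return short, specific error messages instead of letting a malformed call fail somewhere deep inside the tool. The sketch below hand-rolls a tiny schema check to stay dependency-free. The schema format & the arguments (`workspace`, `project`, `limit`) are hypothetical, & a real system might use a JSON Schema validator instead.

```python
from typing import Any

# Hypothetical schema for a list-tasks tool: argument name -> (expected type, required?)
LIST_TASKS_SCHEMA: dict[str, tuple[type, bool]] = {
    "workspace": (str, True),
    "project": (str, False),
    "limit": (int, False),
}

def validate_args(args: dict[str, Any], schema: dict[str, tuple[type, bool]]) -> list[str]:
    """Return a list of readable problems; an empty list means the call may run."""
    problems = []
    for name in args:
        if name not in schema:
            problems.append(f"unknown argument '{name}'")
    for name, (expected, required) in schema.items():
        if name not in args:
            if required:
                problems.append(f"missing required argument '{name}'")
        # Exact type match, so True is rejected where an int is expected.
        elif type(args[name]) is not expected:
            problems.append(f"'{name}' should be {expected.__name__}")
    return problems

print(validate_args({"workspace": "acme", "project": "Q3"}, LIST_TASKS_SCHEMA))
print(validate_args({"limit": "10", "owner": "me"}, LIST_TASKS_SCHEMA))
```

The error strings can be fed back to the model for a retry, or used to reject the call outright. Either way, the tool never runs with arguments it wasn't designed for.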

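This constraint eliminates the compounding error rate of chained LLM tool calls. When large models make sequential tool calls, errors accumulate: every additional call is another chance to fail, so the odds that the whole chain succeeds shrink exponentially with its length.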
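The compounding is easy to quantify under a simplifying assumption: if each call succeeds independently with probability p, a chain of n calls succeeds with probability pⁿ. The sketch below applies that to a 92% per-call success rate like Qwen's in the test above. Independence is an assumption; real failures are often correlated.

```python
def chain_success(p_single: float, n_calls: int) -> float:
    """Probability that every one of n independent tool calls succeeds."""
    return p_single ** n_calls

for n in (1, 3, 5, 10):
    print(n, round(chain_success(0.92, n), 3))
```

At 92% per call, a three-call chain succeeds about 78% of the time & a ten-call chain only about 43%, while a tool call that never fails keeps the chain at 100%.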

Small action models force better system design, keeping the best of LLMs and combining it with specialized models.
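One way to read "keeping the best of LLMs & combining it with specialized models" is a router. A small action model handles tool selection first, & a larger model is consulted only when the small model's call fails validation. This is a hypothetical illustration of that pattern, not an architecture described in the post. `small_model` & `large_model` are stand-in functions that return a tool call as a dict, & the tool names are made up.

```python
from typing import Callable, Optional

ToolCall = dict  # {"name": str, "arguments": dict}

KNOWN_TOOLS = {"list_asana_tasks", "check_crm"}  # hypothetical tool names

def is_valid(call: Optional[ToolCall]) -> bool:
    """Accept only calls that name a known tool and carry a dict of arguments."""
    return (
        isinstance(call, dict)
        and isinstance(call.get("name"), str)
        and call["name"] in KNOWN_TOOLS
        and isinstance(call.get("arguments"), dict)
    )

def route(prompt: str,
          small_model: Callable[[str], Optional[ToolCall]],
          large_model: Callable[[str], Optional[ToolCall]]) -> tuple[str, Optional[ToolCall]]:
    """Try the cheap model first; escalate to the large model only on an invalid call."""
    call = small_model(prompt)
    if is_valid(call):
        return "small", call
    call = large_model(prompt)
    return ("large", call) if is_valid(call) else ("none", None)

# Stand-in models for illustration.
def good_small(prompt: str) -> ToolCall:
    return {"name": "list_asana_tasks", "arguments": {}}

def bad_small(prompt: str) -> ToolCall:
    return {"name": "list_tasks", "arguments": {}}  # not a known tool

def large(prompt: str) -> ToolCall:
    return {"name": "list_asana_tasks", "arguments": {"project": "Q3"}}

print(route("What are my tasks?", good_small, large))
print(route("What are my tasks?", bad_small, large))
```

Most requests stay on the fast, cheap path, & the expensive model is paid for only when it is actually needed.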

This architecture is more efficient, faster, & more predictable.
