Trendaavat aiheet

#

Bonk Eco continues to show strength amid $USELESS rally

#

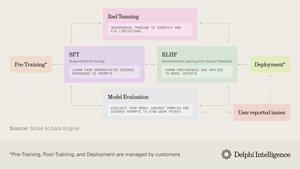

Pump.fun to raise $1B token sale, traders speculating on airdrop

#

Boop.Fun leading the way with a new launchpad on Solana.

If you read one thing this week, I would suggest the AI report below:

"From Data Foundries to World Models"

It weaves data foundries, context engineering, RL environments, world models & more into an approachable yet comprehensive essay on the current edge of AI.👇(0/12)

Full Article:

🧵below:

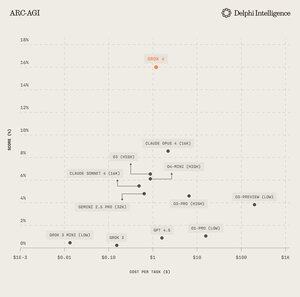

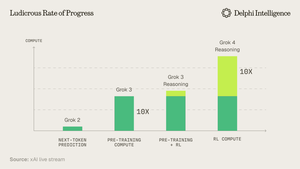

1) From the Grok 4 release, it's clear we have not hit "a wall" in compute spend.

Source: @xai

2) Increasingly, data appears to be the short pole in the tent.

Source: @EpochAIResearch

3) Most of this spend is going towards generating high-quality data sets for post-training, which is rapidly approaching 50% of compute budgets.

4) This has led to an inflection in demand for "data foundries" like @scale_AI @HelloSurgeAI @mercor_ai & more who help source the necessary expertise & craft data pipelines essential for RL in non-verifiable domains...

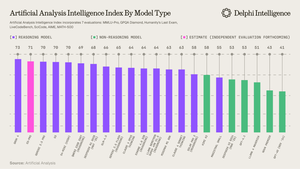

5) Which can help fuel ever more capable reasoning models, clearly the scaling paradigm du jour per @ArtificialAnlys.

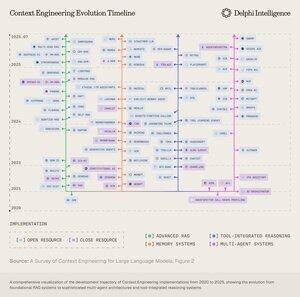

6) And yet, models are no longer constrained by IQ but by context. Prompt engineering has passed the baton to "Context Engineering" - a burgeoning field optimizing information payloads to LLMs.

7) The space is extremely dynamic but generally entails scaling context across 2 vectors:

1. Context Length: the computational & architectural challenges of processing ultra-long sequences

2. Multimodal: scaling context beyond text to truly multimodal environments.

8) Perhaps the ultimate expression of context eng. is crafting "RL environments" that perfectly mimic tasks on which RL can be run.

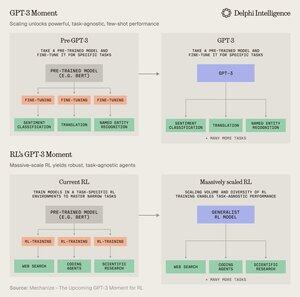

While data-constrained today, @MechanizeWork believes we are heading towards a "GPT-3-esque" inflection with massively scaled RL.

9) However, crafting these environments is quite labor-intensive and doesn't seem very "bitter-lesson-pilled".

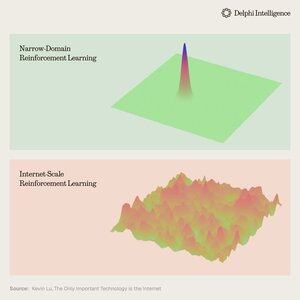

@_kevinlu wonders whether there is a way to harness the internet for post-training, as was done for pre-training.

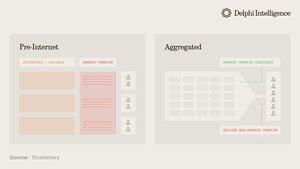

10) While an interesting thought, much of the internet has been captured by a few large, vertically integrated ecosystems that are rapidly running RL across their own components to make RL itself even faster

(e.g. AlphaEvolve from @GoogleDeepMind)

11) It's unclear if these large companies view disparate RL environments as essential or just a temporary stop en route to full "world models," complete with digital twins of nearly every phenomenon.

Genie 3 from Google is certainly a nod in this direction:

Aug 5, 22:03

What if you could not only watch a generated video, but explore it too? 🌐

Genie 3 is our groundbreaking world model that creates interactive, playable environments from a single text prompt.

From photorealistic landscapes to fantasy realms, the possibilities are endless. 🧵

12) Which raises the question: are vertically integrated approaches to building synthetic intelligence, with their faster RL feedback loops, destined to speed ahead and capture the market, or can modular systems of latent compute, data and talent compete given effective orchestration?
