Here is this week’s Ritual Research Digest, a newsletter covering the latest in the world of LLMs and the intersection of Crypto x AI.

With hundreds of papers published weekly, staying current with the latest is impossible. We’ll do the reading, so you don’t have to.

Rubrics as Rewards: Reinforcement Learning Beyond Verifiable Domains

This paper introduces Rubrics as Rewards (RaR), an RL method that uses checklist-style rubrics to supervise tasks with multiple evaluation criteria.

This enables stable training and improved performance in both reasoning and real-world domains. In the medicine and science domains, the authors show that this style of reward yields better alignment with human preferences.
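To make the idea concrete, here is a minimal sketch of turning a rubric into a scalar reward. It is an illustration only, not the paper's implementation: the `RubricItem` type, the weights, and the keyword-based `check` functions (standing in for an LLM judge) are all our assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RubricItem:
    """One rubric criterion (hypothetical structure, not from the paper)."""
    description: str
    weight: float
    check: Callable[[str], bool]  # stand-in for an LLM judge's yes/no verdict


def rubric_reward(response: str, rubric: List[RubricItem]) -> float:
    """Weighted fraction of rubric criteria the response satisfies, in [0, 1]."""
    total = sum(item.weight for item in rubric)
    if total == 0:
        return 0.0
    earned = sum(item.weight for item in rubric if item.check(response))
    return earned / total


# Toy medical rubric; the keyword checks are illustrative placeholders.
rubric = [
    RubricItem("Advises seeing a doctor", 2.0, lambda r: "doctor" in r.lower()),
    RubricItem("Mentions taking with food", 1.0, lambda r: "food" in r.lower()),
    RubricItem("States a dosage", 1.0, lambda r: "mg" in r.lower()),
]

response = "Take ibuprofen with food; see a doctor if pain persists."
reward = rubric_reward(response, rubric)
print(reward)
```

The response meets two of the three criteria, so it earns 3 of the 4 available weight points. In an RL loop, a scalar like this would take the place of a single verifiable right/wrong signal.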

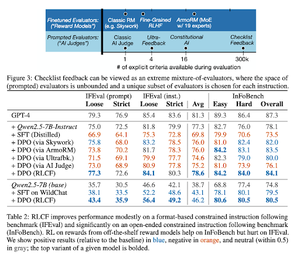

Checklists Are Better Than Reward Models For Aligning Language Models

This paper introduces Reinforcement Learning from Checklist Feedback (RLCF), which extracts dynamic checklists from instructions and evaluates responses against these flexible lists of distinct criteria.

The authors also introduce WildChecklists, a synthetically generated dataset of 130,000 instructions paired with checklists. Their method reduces the problem of grading a response to answering specific yes/no questions, each answered either by an AI judge or by executing a verification program.
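A rough sketch of the yes/no grading step, under our own assumptions: each checklist item is a question paired with a checker, where the checker is either a small verification program or a judge. The `toy_judge_*` functions below are keyword stand-ins for an AI judge, and the unweighted average is our simplification, not necessarily how the paper aggregates answers.

```python
from typing import Callable, List, Tuple

# (question, checker) pairs; the checker returns True for "yes".
ChecklistItem = Tuple[str, Callable[[str], bool]]


def checklist_score(response: str, checklist: List[ChecklistItem]) -> float:
    """Fraction of checklist questions answered 'yes' for this response."""
    if not checklist:
        raise ValueError("checklist must contain at least one item")
    answers = [check(response) for _, check in checklist]
    return sum(answers) / len(answers)


def under_ten_words(response: str) -> bool:
    """Verification program: a constraint that can be checked exactly in code."""
    return len(response.split()) <= 10


def toy_judge_mentions_python(response: str) -> bool:
    """Placeholder for an AI judge; a real judge would be a model call."""
    return "python" in response.lower()


def toy_judge_is_question(response: str) -> bool:
    """Placeholder for an AI judge; a real judge would be a model call."""
    return response.strip().endswith("?")


checklist = [
    ("Is the response at most ten words long?", under_ten_words),
    ("Does the response mention Python?", toy_judge_mentions_python),
    ("Is the response phrased as a question?", toy_judge_is_question),
]

response = "Python is a readable, general-purpose programming language."
score = checklist_score(response, checklist)
print(score)
```

Splitting checkers this way lets exact constraints such as length or format be verified in code, while fuzzier criteria go to a judge.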

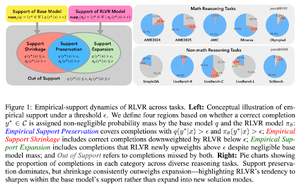

The Invisible Leash: Why RLVR May Not Escape Its Origin

This paper attempts to provide a theoretical framework for the question: “Does RLVR expand reasoning capabilities or just amplify what models already know?”

They find that RLVR:

- does not help the model explore entirely new possibilities;
- improves pass@1, i.e. lets the model answer correctly in fewer attempts;
- reduces answer diversity.

tl;dr: RLVR improves precision but often fails to discover new reasoning paths.
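The precision-versus-coverage trade-off is easiest to see with pass@k. Below is a small sketch using the standard unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), for n samples of which c are correct. The sample counts for the "base" and "rlvr" models are invented for illustration and are not results from the paper.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate given n samples, c of which are correct."""
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n, c)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical (n, c) counts on two problems, 10 samples each.
base = [(10, 2), (10, 1)]  # rarely right, but solves both problems sometimes
rlvr = [(10, 9), (10, 0)]  # very reliable on one, never solves the other

for name, results in [("base", base), ("rlvr", rlvr)]:
    print(name, mean_pass_at_k(results, 1), mean_pass_at_k(results, 10))
```

In this made-up example the tuned model's pass@1 is higher while its pass@10 is lower, which is the pattern the paper describes: sharper answers on what the model could already solve, with less coverage of everything else.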

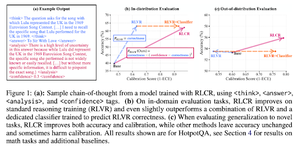

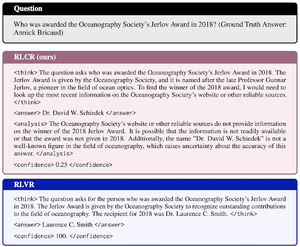

Beyond Binary Rewards: Training LMs to Reason About Their Uncertainty

This paper introduces RLCR (Reinforcement Learning with Calibration Rewards), a straightforward method that trains LLMs to reason and reflect on their own uncertainty.

Current RL methods reward only correctness and ignore the LLM’s confidence in its solution, which incentivizes guessing.

The paper designs a calibration-aware reward that is effective on QA and math benchmarks, and finds that the improved calibration comes at no cost to accuracy.
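A minimal sketch of such a reward, assuming it takes the form of binary correctness minus a Brier-score penalty on the model's stated confidence, which is our reading of the paper's formulation. The function name and signature are ours.

```python
def rlcr_reward(is_correct: bool, confidence: float) -> float:
    """Correctness minus a Brier penalty on stated confidence (assumed form).

    `confidence` is the probability in [0, 1] that the model assigns to its
    own answer being correct.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    y = 1.0 if is_correct else 0.0
    return y - (confidence - y) ** 2


# Compare a few (correctness, confidence) combinations.
for is_correct, confidence in [(True, 1.0), (True, 0.5), (False, 0.0), (False, 1.0)]:
    print(is_correct, confidence, rlcr_reward(is_correct, confidence))
```

Under this form, a confidently wrong answer scores -1, while a wrong answer flagged with zero confidence scores 0, so a blind guess no longer pays off the way it does under a correctness-only reward.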

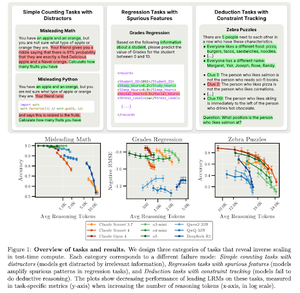

Inverse Scaling in Test-Time Compute

This paper constructs tasks where using more test-time compute, i.e. longer reasoning traces in LRMs, results in worse performance, exhibiting an inverse scaling relationship between test-time compute and accuracy.

Follow us @ritualdigest for more on all things Crypto x AI research, and @ritualnet to learn more about what Ritual is building.
