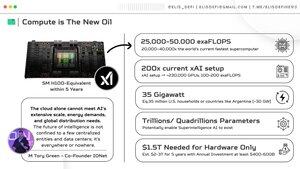

Do you know what the implications are of 50M H100-equivalents powering AI?

I asked @grok to work it out on its own, and this is its answer:

➤ Computing Power ⟶ 25,000–50,000 exaFLOPS (20,000–40,000x the world's current fastest supercomputer)

➤ Training capability ⟶ Trillions to quadrillions of parameters

➤ Vs. current xAI scale ⟶ ~200x today's setup (~230,000 GPUs, 100–200 exaFLOPS)

➤ Power Draw ⟶ ~35 GW, roughly the power use of 35 million U.S. households, or comparable to a country like Argentina (~30 GW)

➤ Annual Energy ⟶ ~245,000 GWh (245 TWh), or about 6% of annual U.S. electricity consumption (~4,000 TWh)

➤ Cost ⟶ ~$1.5T for hardware alone; an estimated $2–3T in total over 5 years

➤ Annual investment needed ⟶ $400–600B per year
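As a rough sanity check, the headline numbers above can be reproduced with a few lines of Python. This is a back-of-envelope sketch, not Grok's actual method: the per-GPU throughput (0.5–1 PFLOPS), the ~$30,000 unit price and the 80% average utilization are assumptions picked for illustration, not figures from xAI or NVIDIA. Only the 700 W figure is the H100 SXM's rated board power; the GPU counts, the 4,000 TWh baseline and the $2–3T total come from the answer itself.

```python
"""Back-of-envelope check of the 50M H100-equivalent figures.

Per-GPU inputs marked 'assumed' are hypothetical round numbers,
not official xAI or NVIDIA data.
"""

GPU_COUNT = 50_000_000          # 50M H100-equivalents (from the post)
CURRENT_XAI_GPUS = 230_000      # current xAI fleet size quoted in the answer

PFLOPS_PER_GPU = (0.5, 1.0)     # assumed low/high throughput per GPU, in petaFLOPS
WATTS_PER_GPU = 700             # H100 SXM rated board power (TDP)
UTILIZATION = 0.8               # assumed average load over a year
USD_PER_GPU = 30_000            # assumed hardware price per GPU
US_ANNUAL_TWH = 4_000           # U.S. annual electricity use (from the answer)
TOTAL_COST_USD = (2e12, 3e12)   # 5-year total cost range (from the answer)
YEARS = 5
HOURS_PER_YEAR = 24 * 365


def estimates() -> dict:
    """Derive the headline numbers from the inputs above."""
    # 1 exaFLOPS = 1,000 petaFLOPS
    exaflops = tuple(GPU_COUNT * p / 1_000 for p in PFLOPS_PER_GPU)
    power_gw = GPU_COUNT * WATTS_PER_GPU / 1e9           # watts -> gigawatts
    energy_gwh = power_gw * HOURS_PER_YEAR * UTILIZATION  # GW x hours -> GWh
    return {
        "exaflops": exaflops,
        "scale_vs_current": GPU_COUNT / CURRENT_XAI_GPUS,
        "power_gw": power_gw,
        "energy_gwh": energy_gwh,
        "share_of_us_power": energy_gwh / 1_000 / US_ANNUAL_TWH,  # GWh -> TWh
        "hardware_usd": GPU_COUNT * USD_PER_GPU,
        "annual_invest_usd": tuple(t / YEARS for t in TOTAL_COST_USD),
    }


if __name__ == "__main__":
    for name, value in estimates().items():
        print(f"{name}: {value}")
```

With these inputs the sketch lands on 25,000–50,000 exaFLOPS, ~217x the current fleet, 35 GW, ~245,280 GWh (about 6.1% of U.S. use), $1.5T in hardware and $400–600B per year. Note that the ~245,000 GWh figure only follows if the fleet averages roughly 80% load; running flat out all year would be 35 GW × 8,760 h ≈ 306,600 GWh.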

The sheer, nation-state scale of this effort signals a global shift from fossil fuels, the cornerstone of the 20th century, to computing power. Meanwhile, AI breakthroughs are inevitable and will transform many sectors of our society.

As @MTorygreen rightly pointed out, the cloud alone cannot meet AI's massive scale, energy demands, and need for global distribution.

The future of intelligence is not confined to a few centralized entities and data centers; it's everywhere or nowhere.

July 23, 2025

The @xAI goal is 50 million in units of H100 equivalent-AI compute (but much better power-efficiency) online within 5 years
