Andrew Curran

🏰 - I write about AI, mostly. Expect some strange sights.

1M! Huge upgrade.

Claude · 13 Aug, 00:05

Claude Sonnet 4 now supports 1 million tokens of context on the Anthropic API—a 5x increase.

Process over 75,000 lines of code or hundreds of documents in a single request.

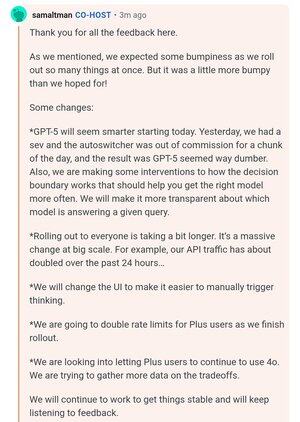

OpenAI Reddit AMA thread:

Sam Altman: 'Yesterday, we had a sev and the autoswitcher was out of commission for a chunk of the day, and the result was GPT-5 seemed way dumber. Also, we are making some interventions to how the decision boundary works that should help you get the right model more often. We will make it more transparent about which model is answering a given query.'

The Reddit AMA is shaping up to be quite a scene. Right now it looks like villagers gathering outside Dr. Frankenstein's castle.

Sentiment there, which is currently reflected in my timeline here, is broadly negative. People have many complaints: they don't like auto-switching, and they want to choose the model themselves. They want to know which model they are talking to in each exchange. They want to go back to using 4o, o3 and o4-mini. Plus and Team users want to be able to select 4o and o3 from the legacy tab, which right now is only available to Pro subscribers. Plus plan users feel like they got a significant downgrade in reasoning time.

It hasn't even been 24 hours since launch, so we should give OAI some time to address these things. Maybe we will even get some answers in the AMA. GPT-5 seems extremely capable, but I feel like I need much more time to find its strengths and see the shape of it. I gave up yesterday because, honestly, things feel really unstable right now, but I will talk to it much more today in thinking mode. It's probably super capable in some weird direction that no one knows about yet, including the people who built it. But that's the fun in this game, finding those zones. I'll cover the AMA later today.

Tibor Blaho · 8 Aug, 19:57

A bit sad how the GPT-5 launch is going so far, especially after the long wait and high expectations

- The automatic switching between models (the router) seems partly broken/unreliable

- It's unclear exactly which model you're actually interacting with (standard or mini, reasoning level, old/new)

- Router issues probably contribute to many negative first impressions ("GPT-5 is bad"), especially when you can't easily tell which model is handling your requests - e.g. GPT-5 Thinking performs better at writing compared to standard GPT-5 ("think harder" prompts might also help)

- I'm seeing lots of complaints about legacy models being removed and usage limits for Plus (and also Free) accounts ("bring back 4o, o3, ...")

- Context window lengths aren't clear, nor how many and which exact GPT-5 models ChatGPT uses (standard, mini, new, old) - no indicator of which model is active at any moment

- The gradual rollout and current capacity constraints add to confusion, because not everyone can access the new models yet

- Mixed-up charts during the livestream provided plenty of free material for chart-crime and vibe-charting posts, which hasn't helped build trust or confidence

- And of course, the community had extremely high expectations across all fronts and modalities, as usual

I'm hoping improvements in the next hours/days, and today's AMA, can clarify some of these open issues and questions

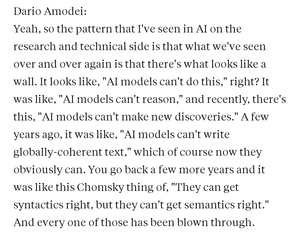

Dario responding to Dwarkesh. In Davos this year he said deep learning is like a river, and that every time it hits a rock it just finds a new path around it. Ilya Sutskever said the same thing at his talk at the end of last year; at every obstacle a new path appears.

John Collison · 6 Aug, 22:34

Anthropic is one of the fastest-growing businesses of all time. @DarioAmodei and I chatted about flying past $5b in ARR, Anthropic's focus on B2B, payback economics of individual models, talent wars, AI market structure, and lots more. And you all said that you all want longer episodes, so this is an hour!

Timestamps

00:00 Intro

00:51 What’s it like to start a company with your sibling?

01:43 Building Anthropic with 7 cofounders

02:53 $5 billion in ARR; vertical applications

07:18 Developing a platform-first company

10:09 Working with the DoD

11:13 Proving skeptics wrong about revenue projections

13:14 Capitalistic impulses of AI models

15:44 AI market structure and players

16:56 AI models as standalone P&Ls

20:48 The data wall and styles of learning

22:20 AI talent wars

26:04 Pitching Anthropic’s API business to investors

27:49 Cloud providers vs AI labs

29:06 AI customization and Claude for enterprise

33:01 Dwarkesh’s take on limitations

36:12 19th-century notion of vitalism

37:28 AI in medicine, customer service, and taxes

41:00 How to solve for hallucinations

42:41 The double-standard for AI mistakes

44:14 Evolving from researcher to CEO

46:59 Designing AGI-pilled products

47:57 AI-native UIs

50:10 Model progress and building products

52:23 Open-source models

54:43 Keeping Anthropic AGI-pilled

57:11 AI advancements vs safety regulations

01:02:04 How Dario uses AI
