
Kirill Balakhonov | Nethermind

Creator of AuditAgent | AI x Crypto x Product | Building agentic economy

Why did OpenAI release open-weight models? To kill its own business... (or not?)

Yes, @OpenAI just released two models with open weights, which means developers can use them without paying OpenAI anything. Specifically, they are released under the permissive Apache 2.0 license, which allows commercial use. So why did OpenAI do this? There are several aspects.

But first, I want to clarify that releasing a model with open weights doesn't make it open source in the same sense as source code you can inspect and build yourself, like the @Linux operating system. AI models are different. A model is a black box, a set of weights. You can test it on different tasks and see how it behaves, but if you can't reproduce the training process, you can never be sure there are no backdoors or security vulnerabilities that ended up inside it, intentionally or accidentally. So let's separate this from open source right away. Unfortunately, open-weight models cannot be fully trusted (though they can be cheap).

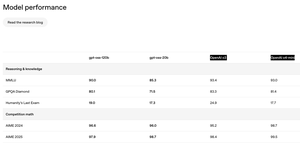

The other aspect I noticed, shown in the picture, is that the quality of these open-weight models isn't very different from OpenAI's flagship models that are only available through the API. This is great, and unexpected! You might wonder how much money OpenAI could lose by letting developers use these models for free. But the models aren't everything you get from OpenAI. For example, when you use ChatGPT via the UI and choose the o3 model, there's actually a complex agent working under the hood: it uses these models but adds a lot of logic on top to work well with your documents and tools. You don't get any of that from the published weights.

So why did OpenAI release open-weight models? First, its main competitors, particularly @Meta, @MistralAI, and @deepseek_ai (oh, and @Google), have already released competitive open-weight models that people use, and OpenAI's popularity among users of open models wasn't growing. Moreover, developers who need both open-weight models (for local/private computation) and API-accessible models (for harder tasks) simply couldn't get both from OpenAI, so it was easier for them to go with competitors like Google or DeepSeek.

Second, there's significant pressure from both users and regulators for more openness. People are concerned that AI might get out of control or fall under the control of a narrow group of Silicon Valley companies, and they want more transparency. And even judged purely on competition and sales, I'd argue this move toward openness will likely make OpenAI's business bigger, not smaller.

And third, of course, there's the joke about OpenAI's name. As things developed, the company called OpenAI became the most closed of the leading AI companies. That's funny in itself, but now it has changed. What do you think?


Most people do not understand at all how the replacement of people by AI works (or how it DOES NOT work). Even a ten-fold acceleration of everything a specialist does doesn’t automatically erase the job itself—it just rewrites the economics around it. When the effective price of a deliverable plummets, latent demand that used to sit on the shelf suddenly becomes viable. I’ve never met a product owner who thinks their engineers are shipping more features than the roadmap needs; the wish-list is always longer than the headcount allows. Make each feature ten times cheaper to build and you don’t cut teams by a factor of ten—you light up every “nice-to-have” that once looked unaffordable, plus entire green-field products no one bothered to scope.
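The "ten times cheaper" argument can be made concrete with a back-of-the-envelope model. This is a simplification, assuming total effort is proportional to the number of features shipped times the cost per feature, with $k$ as a stand-in for how much the backlog expands once features get cheaper:

```latex
\begin{aligned}
E  &= F \cdot c                                  && \text{effort today: } F \text{ features at cost } c \text{ each} \\
E' &= (kF) \cdot \frac{c}{10} = \frac{k}{10}\,E  && \text{cost falls } 10\times,\ \text{demand grows } k\times
\end{aligned}
```

Headcount drops tenfold only when $k = 1$, i.e. when there is no latent demand at all; for $k \ge 10$ it stays flat or grows.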

A recent @Microsoft Research study on real-world Copilot usage underlines the same point. Users come for help drafting code or gathering facts, but the model ends up coaching, advising and teaching—folding brand-new kinds of labour into a single session. Professions aren’t monoliths; they’re bundles of subprocesses, each only partially (and imperfectly) covered by today’s models. As AI tools evolve, the scope of the role evolves with them, often expanding rather than shrinking.

Even with the AI smart-contract auditor we've built at @NethermindEth, despite its name, we target a very specific, narrow part of the process: finding potential vulnerabilities. Security specialists use it as a tool while doing much more complex and multifaceted work: formulating strategies, validating findings, correcting the AI, adding implicit context, communicating with developers, uncovering hidden intentions, and managing expectations.

So instead of tallying which jobs will “disappear,” it’s more useful to ask what problems become worth solving once the marginal cost of solving them falls off a cliff. History suggests the answer is “far more than we can staff for,” and that argues for a future where talent is redeployed and multiplied, not rendered obsolete.


Andrej Karpathy supports adopting the term "context engineering" for AI software development with LLMs.

This term has long seemed necessary. Every time I explain how we develop Nethermind AuditAgent, one of the key aspects, besides domain expertise (web3 security), the best available AI models (from OpenAI, Anthropic, and Google), and tooling for LLMs, is precisely "context engineering".

People sometimes say "context is king," and it really is true. LLMs, whether huge frontier models or optimized small ones, are powerful tools, but like any tool, in the wrong hands they give much weaker results than they could if used correctly. Context management (or engineering) is a complex, poorly documented, and constantly evolving area. It emerged as an extension of prompt engineering, a term that already carries some negative connotations.

Andrej listed the main aspects of context engineering (see the second screenshot), but in each specific task, people achieve excellent results largely through trial and error: repeatedly selecting the context elements that are really needed at each stage of problem-solving, collecting benchmarks for each stage, tracking metrics, splitting datasets into training, validation, and test sets, and so on.
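Selecting "the right context elements" under a limited window is, at its simplest, a budgeted selection problem. The sketch below is a minimal illustration, not how AuditAgent works: it assumes each candidate item already has a relevance score from some upstream scorer, approximates tokens by word count, and greedily packs items by relevance per token after the mandatory ones. `ContextItem`, `approx_tokens`, and `pack_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str         # e.g. "task", "few-shot example", "retrieved doc"
    text: str
    relevance: float  # assumed to come from some upstream scorer (hypothetical)


def approx_tokens(text: str) -> int:
    """Crude token estimate: counts whitespace-separated words, not real tokenizer output."""
    return len(text.split())


def pack_context(items: list[ContextItem], budget: int, required: set[str]) -> list[ContextItem]:
    """Greedily fill a token budget: required items first, then by relevance per token.

    Required items are always included, even if they alone exceed the budget.
    """
    chosen = [item for item in items if item.name in required]
    used = sum(approx_tokens(item.text) for item in chosen)
    # Rank optional items by relevance density, so short, highly relevant
    # snippets win over long, marginally relevant ones.
    optional = sorted(
        (item for item in items if item.name not in required),
        key=lambda item: item.relevance / max(approx_tokens(item.text), 1),
        reverse=True,
    )
    for item in optional:
        cost = approx_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return chosen


items = [
    ContextItem("task", "Find reentrancy bugs in the contract below.", 1.0),
    ContextItem("contract", "function withdraw() external { ... }", 0.9),
    ContextItem("style guide", " ".join(["rule"] * 50), 0.1),
]
packed = pack_context(items, budget=20, required={"task"})
print([item.name for item in packed])  # ['task', 'contract']
```

In practice the scorer, the token counter, and the budget are exactly the knobs that get tuned through the trial-and-error and per-stage benchmarks described above.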

What do you think about "context engineering"?

Andrej Karpathy, Jun 25, 2025

+1 for "context engineering" over "prompt engineering".

People associate prompts with short task descriptions you'd give an LLM in your day-to-day use. When in every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step. Science because doing this right involves task descriptions and explanations, few shot examples, RAG, related (possibly multimodal) data, tools, state and history, compacting... Too little or of the wrong form and the LLM doesn't have the right context for optimal performance. Too much or too irrelevant and the LLM costs might go up and performance might come down. Doing this well is highly non-trivial. And art because of the guiding intuition around LLM psychology of people spirits.

On top of context engineering itself, an LLM app has to:

- break up problems just right into control flows

- pack the context windows just right

- dispatch calls to LLMs of the right kind and capability

- handle generation-verification UIUX flows

- a lot more - guardrails, security, evals, parallelism, prefetching, ...

So context engineering is just one small piece of an emerging thick layer of non-trivial software that coordinates individual LLM calls (and a lot more) into full LLM apps. The term "ChatGPT wrapper" is tired and really, really wrong.
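One item from that list, dispatching calls to LLMs "of the right kind and capability", can be sketched as a small router. This is a hypothetical policy for illustration only: the tier names, the set of "hard" step kinds, and the context limit are all assumptions, not any particular product's configuration.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SMALL = "small-fast-model"       # hypothetical model identifiers
    LARGE = "large-reasoning-model"


@dataclass
class Step:
    kind: str            # e.g. "classify", "summarize", "analyze"
    context_tokens: int  # size of the packed context for this call


# Hypothetical policy: step kinds that need the stronger model.
HARD_KINDS = {"analyze", "plan", "verify"}
SMALL_CONTEXT_LIMIT = 8_000  # assumed context limit for the small model


def route(step: Step) -> Tier:
    """Pick a model tier for one LLM call inside a larger control flow."""
    if step.kind in HARD_KINDS:
        return Tier.LARGE
    if step.context_tokens > SMALL_CONTEXT_LIMIT:
        return Tier.LARGE
    return Tier.SMALL


pipeline = [Step("classify", 500), Step("analyze", 3_000), Step("summarize", 12_000)]
for step in pipeline:
    print(step.kind, "->", route(step).value)
```

Keeping the routing rule in one small function makes it easy to swap in a learned or eval-driven policy later without touching the rest of the control flow.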


Yesterday we pushed a new product into production—I.R.I.S. (Integrity & Risk Intelligence Scanner), the first AI agent on X (formerly Twitter) that:

• Accepts a smart-contract repo or the address of a deployed contract

• Runs the code through our SaaS platform AuditAgent—already a market-leading solution used by external auditors and dev teams

• Publishes a full vulnerability report without leaving the social feed
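The first step of that flow, turning a mention into an audit target, might look like the sketch below. It is purely illustrative and not I.R.I.S.'s actual code: it assumes EVM-style 20-byte hex addresses and GitHub repository URLs, and `AuditRequest` and `parse_request` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumptions for illustration: EVM-style hex addresses and GitHub repo URLs.
EVM_ADDRESS = re.compile(r"\b0x[0-9a-fA-F]{40}\b")
GITHUB_REPO = re.compile(r"https?://github\.com/([\w.-]+)/([\w.-]+)")


@dataclass
class AuditRequest:
    kind: str    # "repo" or "address"
    target: str


def parse_request(tweet_text: str) -> Optional[AuditRequest]:
    """Extract an audit target from a mention; returns None if nothing usable is found."""
    repo = GITHUB_REPO.search(tweet_text)
    if repo:
        owner, name = repo.groups()
        # Drop a trailing sentence period first, then an optional ".git" suffix.
        name = name.rstrip(".").removesuffix(".git")
        return AuditRequest("repo", f"{owner}/{name}")
    address = EVM_ADDRESS.search(tweet_text)
    if address:
        return AuditRequest("address", address.group(0).lower())
    return None


print(parse_request("@UndercoverIRIS audit https://github.com/acme/vault.git"))
# AuditRequest(kind='repo', target='acme/vault')
```

Repository links are checked before addresses so that a hex string appearing inside a URL path is not mistaken for a deployed contract.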

Why?

• A friction-free channel. Developers get an audit where they’re already talking about code—no forms, no email threads.

• AuditAgent under the hood. Not just a “scanning engine,” but our flagship service powering real-world audits.

• Insight in ~30 minutes. Perfect triage before a deep manual review.

• Go-to-market boost. The Twitter agent showcases AuditAgent’s strength and funnels users to the full platform.

First 16 hours on the timeline

✨ 2.7 M impressions

🔥 49 K engagements

📊 85 % positive sentiment (214 tweets)

🛠️ ≈150 tweets sharing practical use cases

🔍 33 express audits

📋 38 454 lines of code scanned

⚠️ 377 vulnerabilities detected

A personal note

Exactly a year ago I joined Nethermind with what sounded like a risky hypothesis: "AI will become an essential part of smart-contract security, but only specialized, workflow-native tools will truly help professionals."

Twelve months later we have two products in production, AuditAgent and now I.R.I.S. (@UndercoverIRIS), and a clear impact on Web3 security.

Huge thanks to the entire @NethermindEth AI team and to @virtuals_io. Persistence + a solid hypothesis + combined expertise = results the industry can see.

We’ll keep building tools that bring security to developers first—so Web3 gets safer with every commit.


Just back from AI Summit London—and the Enterprise-AI landscape looks very different up close

3 things that hit me:

1️⃣ Over-crowded product shelves.

Every booth promised a plug-and-play “AI platform” that magically fits any stack. But walk the floor long enough and you keep hearing the same blocker: legacy systems without APIs, scattered data, unclear business logic. Reality will be brutal for one-size-fits-all SaaS.

2️⃣ Custom-build shops quietly shine.

Agencies that combine deep domain consulting with rapid custom development have a clear edge. They can drop into the messy middle, stitch things together, and ship something that actually runs inside a client’s brittle infrastructure.

3️⃣ Custom work is getting cheaper, not pricier.

With code-gen models writing adapters, tests and scaffolding, senior devs now orchestrate rather than hand-type. Our own experience of continuously using AI tools across the organization confirms this.

The takeaway

The winners in Enterprise AI won’t be the flashiest “out-of-the-box” agents—they’ll be the nimble teams that can co-create solutions in real time, guided by the messy constraints of legacy tech.

