Andrew Ng

Co-Founder of Coursera; Stanford CS adjunct faculty. Former head of Baidu AI Group/Google Brain. #ai #machinelearning, #deeplearning #MOOCs

I'm thrilled to announce the definitive course on Claude Code, created with @AnthropicAI and taught by Elie Schoppik @eschoppik. If you want to use highly agentic coding - where AI works autonomously for many minutes or longer, not just completing code snippets - this is it.

Claude Code has been a game-changer for many developers (including me!), but there's real depth to using it well. This comprehensive course covers everything from fundamentals to advanced patterns.

After this short course, you'll be able to:

- Orchestrate multiple Claude subagents to work on different parts of your codebase simultaneously

- Tag Claude in GitHub issues and have it autonomously create, review, and merge pull requests

- Transform messy Jupyter notebooks into clean, production-ready dashboards

- Use MCP tools like Playwright so Claude can see what's wrong with your UI and fix it autonomously

Whether you're new to Claude Code or already using it, you'll discover powerful capabilities that can fundamentally change how you build software.

I'm very excited about what agentic coding lets everyone now do. Please take this course!

Announcing a new Coursera course: Retrieval Augmented Generation (RAG)

You'll learn to build high-performance, production-ready RAG systems in this hands-on, in-depth course created and taught by @ZainHasan6, an experienced AI and ML engineer, researcher, and educator.

Today, RAG is a critical component of many LLM-based applications, including customer support, internal company Q&A systems, and even many of the leading chatbots that use web search to answer your questions. This course teaches you in depth how to make RAG work well.

LLMs can produce generic or outdated responses, especially when asked specialized questions not covered in their training data. RAG is the most widely used technique for addressing this. It brings in data from new sources, such as internal documents or recent news, giving the LLM relevant context drawn from private, recent, or specialized information. This lets the LLM generate more grounded and accurate responses.
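To make the retrieve-then-augment idea concrete, here is a minimal Python sketch. It assumes a toy keyword-overlap retriever in place of a real one (BM25, embeddings, or a vector database), and it stops at building the prompt; the LLM call is left out. The function names `retrieve` and `augment_prompt` and the sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k docs sharing the most tokens with the query.

    Keyword overlap is a crude stand-in for a real retriever.
    """
    q = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        d = Counter(tokenize(doc))
        return sum(min(count, d[tok]) for tok, count in q.items())

    return sorted(docs, key=overlap, reverse=True)[:k]


def augment_prompt(query: str, passages: list[str]) -> str:
    # Put the retrieved passages into the prompt so the LLM can ground its answer.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Our store opens at 9am on weekdays.",
    "The return policy allows returns within 30 days.",
    "We ship to Canada and Mexico.",
]
question = "What is the return policy?"
prompt = augment_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

Swapping `retrieve` for a real retriever leaves `augment_prompt` unchanged, which is why the two steps are kept separate here.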

In this course, you’ll learn to design and implement every part of a RAG system, from retrievers to vector databases to generation to evals. You’ll learn about the fundamental principles behind RAG and how to optimize it at both the component and whole-system levels.

As AI evolves, RAG is evolving too. New models can handle longer context windows, reason more effectively, and serve as parts of complex agentic workflows. One exciting growth area is Agentic RAG, in which an AI agent decides autonomously at runtime (rather than following logic hardcoded at development time) what data to retrieve and when and how to go deeper. Even with this evolution, access to high-quality data at runtime remains essential, which is why RAG is a key part of so many applications.

You'll learn via hands-on experiences to:

- Build a RAG system with retrieval and prompt augmentation

- Compare retrieval methods like BM25, semantic search, and Reciprocal Rank Fusion

- Chunk, index, and retrieve documents using a Weaviate vector database and a news dataset

- Develop a chatbot, using open-source LLMs hosted by Together AI, for a fictional store that answers product and FAQ questions

- Use evals to drive reliability improvements, and incorporate multimodal data
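Reciprocal Rank Fusion, one of the retrieval methods listed above, merges several ranked lists (for example, BM25 results and semantic-search results) by giving each document a score of 1/(k + rank) in every list and summing. Here is a minimal Python sketch, assuming 1-based ranks and the commonly used constant k = 60; the document IDs are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):  # ranks are 1-based
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first.
    return sorted(scores, key=lambda d: scores[d], reverse=True)


bm25_ranking = ["d1", "d2", "d3"]      # e.g. keyword (BM25) results
semantic_ranking = ["d3", "d1", "d4"]  # e.g. embedding-search results
fused = reciprocal_rank_fusion([bm25_ranking, semantic_ranking])
print(fused)  # ['d1', 'd3', 'd2', 'd4']
```

Because RRF uses only ranks, it needs no calibration between BM25 scores and cosine similarities; documents that rank well in both lists (d1 and d3 here) rise to the top.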

RAG is an important foundational technique. Become good at it through this course!

Please sign up here:

My talk at YC Startup School on how to build AI startups. I share tips from @AI_Fund on how to use AI to build fast. Let me know what you think!

Y Combinator, July 10, 2025

Andrew Ng (@AndrewYNg) on how startups can build faster with AI.

At AI Startup School in San Francisco.

00:31 - The Importance of Speed in Startups

01:13 - Opportunities in the AI Stack

02:06 - The Rise of Agent AI

04:52 - Concrete Ideas for Faster Execution

08:56 - Rapid Prototyping and Engineering

17:06 - The Role of Product Management

21:23 - The Value of Understanding AI

22:33 - Technical Decisions in AI Development

23:26 - Leveraging Gen AI Tools for Startups

24:05 - Building with AI Building Blocks

25:26 - The Importance of Speed in Startups

26:41 - Addressing AI Hype and Misconceptions

37:35 - AI in Education: Current Trends and Future Directions

39:33 - Balancing AI Innovation with Ethical Considerations

41:27 - Protecting Open Source and the Future of AI

Agentic Document Extraction now supports field extraction! Many doc extraction use cases extract specific fields from forms and other structured documents. You can now input a picture or PDF of an invoice, request the vendor name, item list, and prices, and get back the extracted fields. Or input a medical form and specify a schema to extract patient name, patient ID, insurance number, etc.

One cool feature: If you don't feel like writing a schema (a JSON specification of what fields to extract) yourself, upload one sample document and write a natural-language prompt saying what you want, and we automatically generate a schema for you.
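For readers who haven't written one, here is what a field-extraction schema might look like for the invoice example, written as a JSON Schema-style Python dict, plus a tiny checker for the extracted output. The field names and the `conforms` helper are hypothetical illustrations, not the product's actual schema format; in practice you would likely validate with a full library such as `jsonschema`.

```python
import json

# Hypothetical schema for the invoice example (JSON Schema style).
INVOICE_SCHEMA = {
    "type": "object",
    "properties": {
        "vendor_name": {"type": "string"},
        "items": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "description": {"type": "string"},
                    "price": {"type": "number"},
                },
                "required": ["description", "price"],
            },
        },
    },
    "required": ["vendor_name", "items"],
}

_PY_TYPES = {"string": str, "number": (int, float), "array": list, "object": dict}


def conforms(value, schema: dict) -> bool:
    """Minimal recursive check supporting only type/properties/required/items."""
    kind = schema["type"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(value, _PY_TYPES[kind]) or isinstance(value, bool):
        return False
    if kind == "object":
        if any(key not in value for key in schema.get("required", [])):
            return False
        props = schema.get("properties", {})
        return all(conforms(value[key], sub) for key, sub in props.items() if key in value)
    if kind == "array":
        return all(conforms(item, schema["items"]) for item in value)
    return True


extracted = json.loads(
    '{"vendor_name": "Acme Corp", "items": [{"description": "Widget", "price": 9.5}]}'
)
print(conforms(extracted, INVOICE_SCHEMA))      # True
print(conforms({"items": []}, INVOICE_SCHEMA))  # False: vendor_name is missing
```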

See the video for details!

New Course: Post-training of LLMs

Learn to post-train and customize an LLM in this short course, taught by @BanghuaZ, Assistant Professor at the University of Washington @UW, and co-founder of @NexusflowX.

Training an LLM to follow instructions or answer questions has two key stages: pre-training and post-training. In pre-training, it learns to predict the next word or token from large amounts of unlabeled text. In post-training, it learns useful behaviors such as following instructions, tool use, and reasoning.

Post-training transforms a general-purpose token predictor, trained on trillions of unlabeled text tokens, into an assistant that follows instructions and performs specific tasks. Because post-training is much cheaper than pre-training, it is practical for far more teams to incorporate it into their workflows.

In this course, you’ll learn three common post-training methods and how to use each one effectively: Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), and Online Reinforcement Learning (RL). With SFT, you train the model on pairs of inputs and ideal output responses. With DPO, you provide both a preferred (chosen) and a less preferred (rejected) response and train the model to favor the preferred output. With RL, the model generates an output and receives a reward score based on human or automated feedback, and the model is then updated to improve that score.
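The DPO objective fits in a few lines. The sketch below assumes you already have summed log-probabilities of the chosen and rejected responses under both the model being trained and a frozen reference model; the argument names and the default β = 0.1 are illustrative assumptions, not values taken from the course.

```python
import math


def softplus(z: float) -> float:
    # log(1 + exp(z)), computed without overflow for large |z|.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities.

    loss = -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return softplus(-margin)  # -log(sigmoid(m)) == softplus(-m)


# Policy identical to the reference: margin is 0, so the loss is log 2.
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Raising the chosen response's log-prob relative to the reference lowers the loss.
print(dpo_loss(-10.0, -15.0, -12.0, -15.0))
```

Minimizing this loss pushes up the chosen response's likelihood relative to the reference and pushes down the rejected one's, which is the "favor the preferred output" behavior described above; β controls how far the policy may drift from the reference.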

You’ll learn the basic concepts, common use cases, and principles for curating high-quality data for effective training. Through hands-on labs, you’ll download a pre-trained model from Hugging Face and post-train it using SFT, DPO, and RL to see how each technique shapes model behavior.

In detail, you’ll:

- Understand what post-training is, when to use it, and how it differs from pre-training.

- Build an SFT pipeline to turn a base model into an instruct model.

- Explore how DPO reshapes behavior by minimizing contrastive loss—penalizing poor responses and reinforcing preferred ones.

- Implement a DPO pipeline to change the identity of a chat assistant.

- Learn online RL methods such as Proximal Policy Optimization (PPO) and Group Relative Policy Optimization (GRPO), and how to design reward functions.

- Train a model with GRPO to improve its math capabilities using a verifiable reward.
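The "group relative" part of GRPO can be illustrated briefly: sample several completions for the same prompt, score each with a verifiable reward, and normalize each reward against the group's mean and standard deviation to get its advantage. The sketch below assumes a hypothetical reward that checks whether the last whitespace-separated token equals the reference answer, and uses the population standard deviation; it omits the policy-gradient update itself.

```python
import statistics


def verifiable_reward(completion: str, reference: str) -> float:
    """Hypothetical rule-based reward: 1.0 if the final token equals the answer."""
    tokens = completion.strip().split()
    return 1.0 if tokens and tokens[-1] == reference else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward against its group's mean and (population) std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Several sampled completions for the same prompt, e.g. "What is 7 * 6?"
completions = ["7 * 6 = 42", "The answer is 42", "7 * 6 = 48", "I think 36"]
rewards = [verifiable_reward(c, "42") for c in completions]
advantages = group_relative_advantages(rewards)
print(rewards)     # [1.0, 1.0, 0.0, 0.0]
print(advantages)  # roughly [1, 1, -1, -1]
```

Correct completions get positive advantages and incorrect ones negative, without a separate learned value model; if every completion in a group gets the same reward, all advantages are zero and that group contributes no learning signal.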

Post-training is one of the most rapidly developing areas of LLM training. Whether you’re building a high-accuracy, context-specific assistant, fine-tuning a model's tone, or improving task-specific accuracy, this course will give you experience with the most important techniques shaping how LLMs are post-trained today.

Please sign up here:

I’d like to share a tip for getting more practice building with AI (that is, either using AI building blocks to build applications or using AI coding assistants to create powerful applications quickly): If you find yourself with only limited time to build, reduce the scope of your project until you can build something in whatever time you do have.

If you have only an hour, find a small component of an idea that you're excited about that you can build in an hour. With modern coding assistants like Anthropic’s Claude Code (my favorite dev tool right now), you might be surprised at how much you can do even in short periods of time! This gets you going, and you can always continue the project later.

To become good at building with AI, most people must (i) learn relevant techniques, for example by taking online AI courses, and (ii) practice building. I know developers who noodle on ideas for months without actually building anything — I’ve done this too! — because we feel we don’t have time to get started. If you find yourself in this position, I encourage you to keep cutting the initial project scope until you identify a small component you can build right away.

Let me illustrate with an example — one of my many small, fun weekend projects that might never go anywhere, but that I’m glad I did.

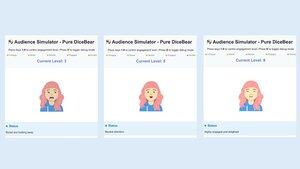

Here’s the idea: Many people fear public speaking. And public speaking is challenging to practice, because it's hard to organize an audience. So I thought it would be interesting to build an audience simulator to provide a digital audience of dozens to hundreds of virtual people on a computer monitor and let a user practice by speaking to them.

One Saturday afternoon, I found myself in a coffee shop with a couple of hours to spare and decided to give the audience simulator a shot. My familiarity with graphics coding is limited, so instead of building a complex simulator of a large audience and writing AI software to simulate appropriate audience responses, I decided to cut scope significantly to (a) simulating an audience of one person (which I could replicate later to simulate N persons), (b) omitting AI and letting a human operator manually select the reaction of the simulated audience (similar to Wizard of Oz prototyping), and (c) implementing the graphics using a simple 2D avatar.

Using a mix of several coding assistants, I built a basic version in the time I had. The avatar could move subtly and blink, but otherwise it used basic graphics. Even though it fell far short of a sophisticated audience simulator, I am glad I built this. In addition to moving the project forward and letting me explore different designs, it advanced my knowledge of basic graphics. Further, having this crude prototype to show friends helped me get user feedback that shaped my views on the product idea.

I have on my laptop a list of ideas of things that I think would be interesting to build. Most of them would take much longer than the handful of hours I might have to try something on a given day, but by cutting their scope, I can get going, and the initial progress on a project helps me decide if it’s worth further investment. As a bonus, hacking on a wide variety of applications helps me practice a wide range of skills. But most importantly, this gets an idea out of my head and potentially in front of prospective users for feedback that lets the project move faster.

New Course: ACP: Agent Communication Protocol

Learn to build agents that communicate and collaborate across different frameworks using ACP in this short course built with @IBMResearch's BeeAI, and taught by @sandi_besen, AI Research Engineer & Ecosystem Lead at IBM, and @nicholasrenotte, Head of AI Developer Advocacy at IBM.

Building a multi-agent system with agents built or used by different teams and organizations can become challenging. You may need to write custom integrations each time a team updates their agent design or changes their choice of agentic orchestration framework.

The Agent Communication Protocol (ACP) is an open protocol that addresses this challenge by standardizing how agents communicate, using a unified RESTful interface that works across frameworks. In this protocol, you host an agent inside an ACP server, which handles requests from an ACP client and passes them to the appropriate agent. Using a standardized client-server interface allows multiple teams to reuse agents across projects. It also makes it easier to switch between frameworks, replace an agent with a new version, or update a multi-agent system without refactoring the entire system.
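To illustrate the client-server split described above, here is an in-process toy in Python: a server hosts agents by name and routes requests, and a client knows only agent names and the request/response shape. This is not the ACP SDK or its wire format (a real ACP server exposes agents through a RESTful interface); all class, method, and field names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

Agent = Callable[[str], str]  # toy agent: text in, text out


@dataclass
class ToyAgentServer:
    """Hosts agents by name and dispatches each request to the named agent."""
    agents: dict[str, Agent] = field(default_factory=dict)

    def register(self, name: str, agent: Agent) -> None:
        self.agents[name] = agent  # replacing an agent is just re-registering it

    def handle(self, request: dict) -> dict:
        agent = self.agents.get(request["agent"])
        if agent is None:
            return {"status": "error", "error": f"unknown agent {request['agent']!r}"}
        return {"status": "completed", "output": agent(request["input"])}


@dataclass
class ToyAgentClient:
    """Knows only agent names and the request/response shape, not the framework."""
    server: ToyAgentServer

    def run(self, agent_name: str, text: str) -> str:
        response = self.server.handle({"agent": agent_name, "input": text})
        if response["status"] != "completed":
            raise RuntimeError(response["error"])
        return response["output"]


server = ToyAgentServer()
server.register("shout", lambda text: text.upper())
server.register("reverse", lambda text: text[::-1])
client = ToyAgentClient(server)

# Sequential workflow: the output of one agent feeds the next.
print(client.run("shout", client.run("reverse", "abc")))  # CBA

# Swap in a new "shout" implementation; the client code is unchanged.
server.register("shout", lambda text: text.upper() + "!")
print(client.run("shout", "hi"))  # HI!
```

The point of the shared interface is visible in the last two lines: the agent behind a name changes, but nothing on the client side needs to be refactored.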

In this course, you’ll learn to connect agents through ACP. You’ll understand the lifecycle of an ACP Agent and how it compares to other protocols, such as MCP (Model Context Protocol) and A2A (Agent-to-Agent). You’ll build ACP-compliant agents and implement both sequential and hierarchical workflows of multiple agents collaborating using ACP.

Through hands-on exercises, you’ll build:

- A RAG agent built with CrewAI and wrapped inside an ACP server.

- An ACP Client to make calls to the ACP server you created.

- A sequential workflow that chains an ACP server, created with Smolagents, to the RAG agent.

- A hierarchical workflow using a router agent that transforms user queries into tasks, delegated to agents available through ACP servers.

- An agent that uses MCP to access tools and ACP to communicate with other agents.

You’ll finish up by importing your ACP agents into the BeeAI platform, an open-source registry for discovering and sharing agents.

ACP enables collaboration between agents across teams and organizations. By the end of this course, you’ll be able to build ACP agents and workflows that communicate and collaborate regardless of framework.

Please sign up here:

Introducing "Building with Llama 4." This short course is created with @Meta @AIatMeta, and taught by @asangani7, Director of Partner Engineering for Meta’s AI team.

Meta’s new Llama 4 has added three new models and introduced the Mixture-of-Experts (MoE) architecture to its family of open-weight models, making them more efficient to serve.

In this course, you’ll work with two of the three new models introduced in Llama 4. First is Maverick, a 400B-parameter model with 128 experts and 17B active parameters. Second is Scout, a 109B-parameter model with 16 experts and 17B active parameters. Maverick and Scout support long context windows of up to 1M and 10M tokens, respectively. Scout's 10M-token window is enough to directly input even fairly large GitHub repos for analysis!
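As a rough illustration of why MoE models are efficient to serve: with 17B active parameters, only about 17/400 ≈ 4% of Maverick's parameters (and about 17/109 ≈ 16% of Scout's) are used for each token. The toy below shows generic top-k expert routing in Python, with scalar "experts" standing in for feed-forward networks. It is not Llama 4's actual router; details such as how many experts each token uses are assumptions here, since the post doesn't describe them.

```python
import math
from typing import Callable


def top_k_moe(x: float, router_logits: list[float],
              experts: list[Callable[[float], float]], k: int = 2) -> tuple[float, list[int]]:
    """Route one token to its top-k experts and mix their outputs.

    Returns the mixed output and the indices of the experts that actually ran.
    """
    # Pick the k experts with the highest router scores.
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the chosen experts' logits only (max-subtracted for stability).
    top = max(router_logits[i] for i in chosen)
    weights = [math.exp(router_logits[i] - top) for i in chosen]
    total = sum(weights)
    # Only the chosen experts are evaluated; the rest cost nothing for this token.
    output = sum(w / total * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x - 3, lambda x: 0.0]
out, used = top_k_moe(4.0, [0.0, 5.0, 5.0, -1.0], experts, k=2)
print(used)  # [1, 2]
print(out)   # 4.5
```

Total parameters grow with the number of experts, but per-token compute grows only with k, which is the trade-off behind the "400B total, 17B active" figures.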

In hands-on lessons, you’ll build apps using Llama 4’s new multimodal capabilities, including reasoning across multiple images and image grounding, in which you can identify elements in images. You’ll also use the official Llama API, work with Llama 4’s long-context abilities, and learn about Llama’s newest open-source tools: its prompt optimization tool, which automatically improves system prompts, and its synthetic data kit, which generates high-quality datasets for fine-tuning.

If you need an open model, Llama is a great option, and the Llama 4 family is an important part of any GenAI developer's toolkit. Through this course, you’ll learn to call Llama 4 via API, use its optimization tools, and build features that span text, images, and large context.

Please sign up here:
